Summary

- Radeon Chill cuts FPS during static moments to reduce GPU power use and temps, then boosts when action resumes.

- The performance impact is barely noticeable in games, and Chill works best in titles that easily hit high frame rates or have lots of static moments.

- Radeon Chill is incompatible with Anti-Lag, so don’t use it in competitive, low-latency games.

Gamers love to optimize their PCs to get the best possible performance in every gaming scenario. However, there’s one particular driver feature on AMD cards that most of us tend to overlook, even though it offers some surprisingly useful benefits. The feature in question? Radeon Chill.

What is Radeon Chill, and what does it actually do?

Radeon Chill isn’t a new feature by any means. It debuted in AMD’s Radeon Software in late 2016, so if you’ve owned an AMD card in recent years, you’ve likely stumbled upon it in the “Gaming” tab of AMD Software. However, few people actually know what it does, so they tend to ignore it.

AMD Software gives a brief explanation of what it does. Put simply, Radeon Chill monitors your in-game movement and lowers FPS during static moments. When the action ramps up, so does the frame rate. This lets the GPU use less power and produce less heat when nothing major is happening, while still picking things back up quickly once the action starts.

Once you enable Radeon Chill, you can set the Idle FPS and Peak FPS. The former is the target FPS when nothing major is happening on-screen, and the latter is the maximum FPS that Radeon Chill will target. Targeting a very low Idle FPS can yield serious power and temperature improvements, but it may start to hurt smoothness if you go too low.
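To make the relationship between the two settings concrete, here’s a toy model in Python. AMD doesn’t publish Chill’s exact heuristics, so everything below is an assumption: the `activity` score (0.0 for no movement, 1.0 for constant input) is a hypothetical stand-in for whatever the driver actually measures, and the linear ramp between Idle FPS and Peak FPS is simply the most basic mapping possible. It only illustrates how the two settings bound the frame-rate target.

```python
def chill_target_fps(activity: float, idle_fps: float, peak_fps: float) -> float:
    """Toy model of a Chill-style frame-rate target (not AMD's real algorithm).

    `activity` is a hypothetical score from 0.0 (no movement) to 1.0 (constant input).
    """
    if idle_fps > peak_fps:
        raise ValueError("Idle FPS must not exceed Peak FPS")
    # Clamp so the target always stays between Idle FPS and Peak FPS.
    activity = max(0.0, min(1.0, activity))
    # Linear ramp: an assumption chosen for simplicity.
    return idle_fps + activity * (peak_fps - idle_fps)


def frame_time_ms(fps: float) -> float:
    """Milliseconds spent per frame at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    # Example values: Chill's default Idle FPS of 75 and a Peak FPS of 238.
    for activity in (0.0, 0.5, 1.0):
        fps = chill_target_fps(activity, idle_fps=75, peak_fps=238)
        print(f"activity {activity:.1f}: target {fps:.1f} FPS, {frame_time_ms(fps):.2f} ms per frame")
```

In this model, an Idle FPS of 75 means roughly 13.3 ms per frame while you stand still, versus about 4.2 ms at a Peak FPS of 238. The lower you push Idle FPS, the longer each idle frame lasts, which is where the smoothness cost comes from.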

Radeon Chill is dynamic, and it’s not to be confused with Frame Rate Target Control, which is a static FPS limit built into the drivers. You can use that too, but it only works in full-screen games, and even then, it may not always function correctly.
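To show what “static” means here, the sketch below models a generic frame limiter. It is not how FRTC is actually implemented (AMD doesn’t document it at this level); it simply illustrates that a static cap pads every frame out to the same time budget regardless of what’s happening in the game. The function names are hypothetical.

```python
from typing import Optional


def limiter_wait_s(render_time_s: float, cap_fps: float) -> float:
    """Idle time a simple static limiter adds after a frame to hold the cap."""
    frame_budget_s = 1.0 / cap_fps
    # If the frame already took longer than the budget, the limiter adds nothing.
    return max(0.0, frame_budget_s - render_time_s)


def effective_fps(render_time_s: float, cap_fps: Optional[float] = None) -> float:
    """Frame rate after an optional static cap, ignoring all other overheads."""
    wait_s = 0.0 if cap_fps is None else limiter_wait_s(render_time_s, cap_fps)
    return 1.0 / (render_time_s + wait_s)


if __name__ == "__main__":
    # Example: a GPU that could render 359 FPS uncapped, with a static cap of 238 FPS.
    render_time_s = 1.0 / 359
    print(f"uncapped: {effective_fps(render_time_s):.0f} FPS")
    print(f"capped:   {effective_fps(render_time_s, cap_fps=238):.0f} FPS")
```

The cap holds the same 238 FPS in a hectic fight or on a menu screen, and it can never raise the frame rate: a frame that already takes longer than the budget passes through unchanged. A dynamic feature like Chill instead moves its target between Idle FPS and Peak FPS.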

AMD suggests using Radeon Chill together with AMD Fluid Motion Frames (AFMF), another overlooked feature that uses an AI-optimized frame generation algorithm to potentially double your FPS. If you use both features at the same time, just make sure that your Idle FPS isn’t set too low, as it can cause noticeable FPS fluctuations.

All in all, Radeon Chill provides a clever way to lower GPU power consumption and temperatures without significantly sacrificing performance, and its integration with other AMD driver features is a big plus.

As you can imagine, this efficiency-oriented feature provides the biggest benefits for laptop and handheld users, but don’t dismiss it entirely if you’re on a desktop. The only AMD GPU I have is my RX 6800 XT, and I’ve recently started experimenting with Radeon Chill to see if it can offer similar benefits for desktop PCs.

Enabling Radeon Chill provided some interesting results

Let’s clear up one potential misconception first. Based on what Radeon Chill does, you’d be forgiven for assuming that it could cause stutter or a less smooth experience. I had that preconception as well, since these kinds of features rarely work perfectly.

However, in the few games I’ve played with Chill enabled, I couldn’t tell that it was active at all, so you could say it works pretty well. That said, it did lower my FPS substantially in one of the games (from 300+ to 144), despite Peak FPS being set to 238 (2 below my monitor’s 240Hz maximum). More on that in a second.

Let’s now look at my tests, which were done on an overclocked RX 6800 XT with its power limits set to the maximum allowed by the drivers.

To establish a baseline, I used 3DMark, a demanding GPU benchmark tool. I got roughly the same score, around 3950, with Chill both on and off, but that revealed a different problem: because this is an extremely intensive synthetic benchmark, Chill never really gets a chance to kick in. That’s why I saw essentially the same figures in both runs (within the margin of error): an average GPU temperature of 68°C, a GPU hotspot temperature of 86°C, and a total graphics power (TGP) draw of 289W. At least now I know how hot my GPU gets and how much power it draws when pushed to its max.

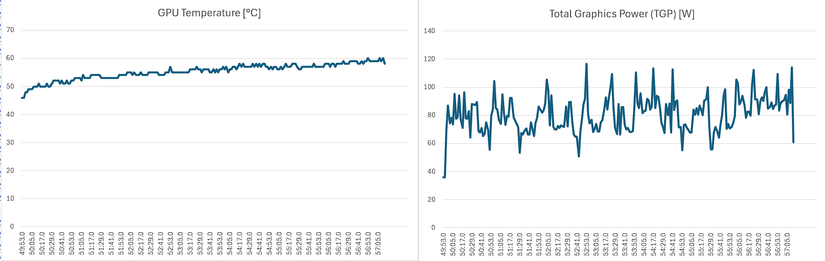

My next test was Marvel Rivals, a fairly demanding, fast-paced game that pushes my GPU to its limits. I didn’t expect Radeon Chill to do much here, and sure enough, the results were practically identical: a GPU temperature of 70°C, a hotspot temperature of 82°C, and a power draw of 227W. The only difference was that I got 140 FPS with Chill enabled vs. 131 with it disabled, but that could easily come down to the map and what happened in each of the two matches (both against bots).

I wasn’t too surprised by this result, as there was never enough static content on screen for Chill to make a measurable difference. At least we know that it’s not throttling performance, so that’s great.

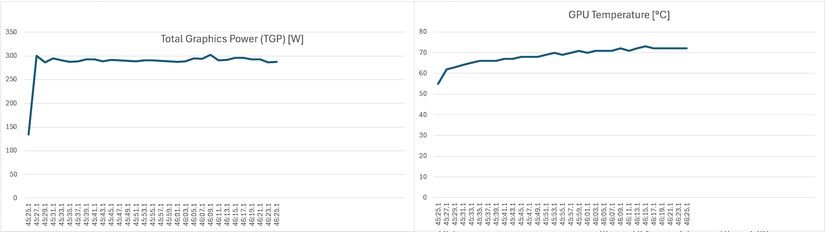

My last test was by far the most interesting, because it involved a game I knew my graphics card could max out without breaking a sweat: Hades II, a popular indie title. Although it’s a visually stunning game, its art style lets my FPS counter stay glued to my monitor’s maximum refresh rate. I had high hopes that Chill could make a real difference, and trust me, it did. To keep things consistent, I played through the same sequence in every run, so the results should be directly comparable.

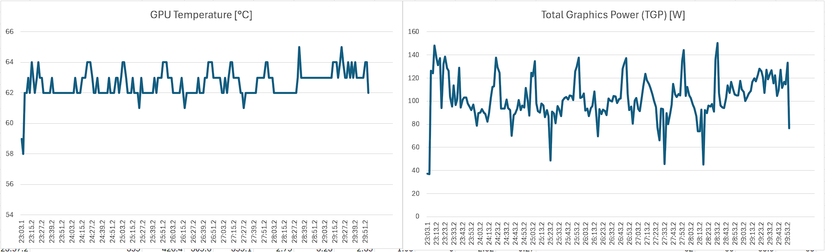

The first test, done without Radeon Chill, gave me the following results: an average framerate of 359 FPS, average GPU temperature of 63°C, GPU hotspot temperature of 69°C, and a total graphics power draw of 103W. I was a bit shocked to see 359 FPS because I thought my driver FPS cap would limit it to 238, but I suppose it disengaged because the game runs in borderless mode.

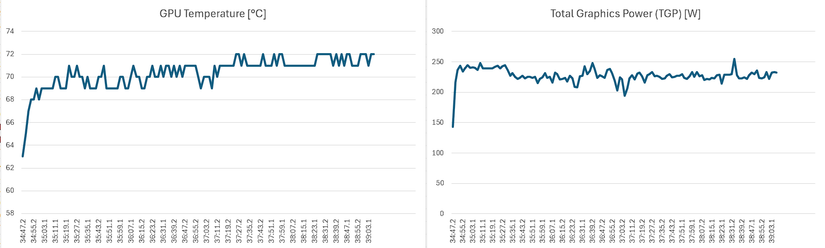

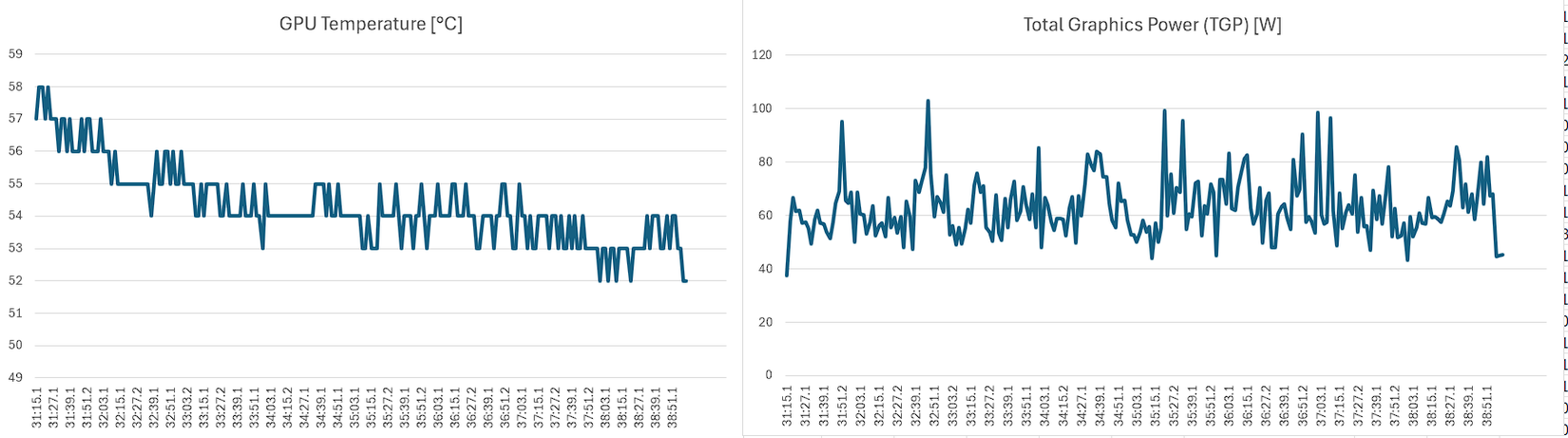

Once I enabled Radeon Chill, I got the following results: an average framerate of 145 FPS, average GPU temperature of 54°C, GPU hotspot temperature of 59°C, and a total graphics power draw of 63W. I was surprised to see such a low average after playing, because I hadn’t noticed a significant difference compared to 240 FPS (which goes to show how little 240Hz actually matters to the average gamer). Unsurprisingly, GPU temperatures and power draw were significantly lower with Chill enabled. The power draw difference alone is 40W, enough to power a 40W incandescent light bulb!

To fix the low FPS, I raised Idle FPS from Chill’s default of 75 to 218 and got the following results: an average framerate of 235 FPS, average GPU temperature of 55°C, GPU hotspot temperature of 61°C, and a total graphics power draw of 81W.
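For a quick side-by-side, the snippet below tabulates the three Hades II runs reported in this article and computes each Chill run’s change against the Chill-off baseline. The numbers are copied from the text; the script only does the subtraction and percentage math.

```python
# Hades II results as reported in this article (same play-through sequence each run).
RUNS = {
    "Chill off": {"fps": 359, "gpu_c": 63, "hotspot_c": 69, "power_w": 103},
    "Chill, Idle FPS 75": {"fps": 145, "gpu_c": 54, "hotspot_c": 59, "power_w": 63},
    "Chill, Idle FPS 218": {"fps": 235, "gpu_c": 55, "hotspot_c": 61, "power_w": 81},
}


def deltas_vs_baseline(runs: dict, baseline: str = "Chill off") -> dict:
    """Return each run's change relative to the baseline run."""
    base = runs[baseline]
    result = {}
    for name, run in runs.items():
        if name == baseline:
            continue
        delta = {metric: run[metric] - base[metric] for metric in base}
        # Relative change in total graphics power, in percent.
        delta["power_pct"] = 100.0 * delta["power_w"] / base["power_w"]
        result[name] = delta
    return result


if __name__ == "__main__":
    for name, d in deltas_vs_baseline(RUNS).items():
        print(
            f"{name}: {d['fps']:+d} FPS, {d['gpu_c']:+d} °C GPU, "
            f"{d['hotspot_c']:+d} °C hotspot, {d['power_w']:+d} W ({d['power_pct']:+.1f}%)"
        )
```

The default Idle FPS run cuts power draw by 40W (about 39%), while the Idle FPS 218 run still saves 22W (about 21%) and 8°C on the GPU.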

That’s still a solid improvement in temperature and power draw, and the fact that the average was 235 FPS rather than 238 suggests that Chill kicked in a few times during static moments, such as when choosing boons or pausing briefly between encounters.
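As a rough illustration of what a 3 FPS shortfall could mean, the sketch below estimates how much of the run was spent at Idle FPS. It rests on two loud assumptions: the frame rate only ever sat at exactly Idle FPS (218) or Peak FPS (238), and the reported average is total frames divided by total time. Neither is guaranteed, so treat the result as a back-of-the-envelope figure, not a measurement.

```python
def idle_time_fraction(avg_fps: float, idle_fps: float, peak_fps: float) -> float:
    """Rough share of play time spent at Idle FPS under a two-state model.

    Assumptions: the frame rate is always exactly idle_fps or peak_fps, and the
    reported average is total frames / total time, so
        avg = f * idle + (1 - f) * peak  =>  f = (peak - avg) / (peak - idle)
    """
    if peak_fps <= idle_fps:
        raise ValueError("peak_fps must be greater than idle_fps")
    fraction = (peak_fps - avg_fps) / (peak_fps - idle_fps)
    # Clamp in case the average falls outside the [idle, peak] range.
    return max(0.0, min(1.0, fraction))


if __name__ == "__main__":
    # Figures from the Idle FPS 218 run: 235 FPS average against a 238 FPS peak.
    print(f"~{idle_time_fraction(235, 218, 238):.0%} of the run at Idle FPS")
```

Under those assumptions, the shortfall works out to about 15% of the run at Idle FPS, which is at least consistent with Chill dipping during brief pauses. Partial ramps between the two targets, or dips for unrelated reasons, would shift the real figure.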

I know, an 8°C drop from an already low temperature and 22W less power draw aren’t world-changing, but the difference is still much bigger than I expected. Those slightly lower temps mean less stress on the GPU and its cooling components, and the lower power draw adds up over time to save a few bucks on your electricity bill.
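To put “a few bucks” into numbers, here’s a quick estimate. The gaming hours and electricity price are hypothetical placeholders, and it assumes the 22W saving measured in Hades II applies to all of your play time, which won’t hold for demanding games where Chill rarely kicks in.

```python
from typing import Tuple


def yearly_energy_savings(watts_saved: float, hours_per_day: float,
                          price_per_kwh: float, days: int = 365) -> Tuple[float, float]:
    """Return (kWh saved, money saved) over `days` of gaming."""
    # Watts x hours = watt-hours; divide by 1000 for kilowatt-hours.
    kwh_saved = watts_saved * hours_per_day * days / 1000.0
    return kwh_saved, kwh_saved * price_per_kwh


if __name__ == "__main__":
    # Hypothetical usage: 3 hours of gaming a day at $0.15 per kWh,
    # assuming the 22 W saving from the Hades II test applies the whole time.
    kwh, cost = yearly_energy_savings(22, hours_per_day=3, price_per_kwh=0.15)
    print(f"{kwh:.1f} kWh per year, roughly ${cost:.2f}")
```

With those placeholder inputs, that’s about 24 kWh a year, or roughly $3.60: small, but real. Plug in your own hours and local rate for a better estimate.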

You should enable Radeon Chill, with a caveat

Given that Radeon Chill doesn’t noticeably hurt the experience, I don’t see a reason not to enable it, with one exception: Radeon Chill and Radeon Anti-Lag are mutually exclusive. Anti-Lag is an important feature for competitive games, as it reduces input lag by adjusting frame timing.

If you want to take advantage of Radeon Chill without sacrificing input responsiveness, only enable it in games where you don’t need those few extra milliseconds of lower input lag. In fact, based on my test results, you should probably reserve Radeon Chill for less demanding games where you can easily max out your FPS, and for games with a lot of static content, such as story-driven titles and RPGs where you often stand idle.
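The rule of thumb above boils down to a simple per-game decision, sketched here as a hypothetical helper. The `Game` fields are assumptions you’d fill in yourself for each title, and the function just encodes this article’s advice; it isn’t an AMD recommendation or part of AMD Software.

```python
from dataclasses import dataclass


@dataclass
class Game:
    name: str
    competitive: bool          # does every millisecond of input lag matter?
    easily_maxes_fps: bool     # does the GPU comfortably exceed the monitor's refresh rate?
    lots_of_static_time: bool  # menus, dialogue, standing around, etc.


def pick_driver_feature(game: Game) -> str:
    """Encode this article's rule of thumb for choosing Chill or Anti-Lag."""
    # Chill and Anti-Lag are mutually exclusive, so latency-sensitive games get Anti-Lag.
    if game.competitive:
        return "Anti-Lag"
    # Chill pays off most when there is frame-rate headroom or idle time to trim.
    if game.easily_maxes_fps or game.lots_of_static_time:
        return "Chill"
    # Otherwise Chill is harmless but unlikely to save much.
    return "Chill (little benefit expected)"


if __name__ == "__main__":
    print(pick_driver_feature(Game("Hades II", False, True, True)))
    print(pick_driver_feature(Game("competitive shooter", True, True, False)))
```

The flag values in the usage lines are illustrative; the point is simply that competitiveness is checked first, because enabling Chill means giving up Anti-Lag.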

Also, don’t forget to experiment with the Idle FPS and Peak FPS settings: an Idle FPS that’s too low can hurt smoothness, and a Peak FPS above your monitor’s refresh rate mostly burns extra power for frames you won’t fully see.
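If you tweak these settings often, the two pitfalls above are easy to encode as a quick sanity check. This is a hypothetical helper, not part of AMD Software, and the 60 FPS smoothness floor is an assumed default you should adjust to taste.

```python
from typing import List


def check_chill_settings(idle_fps: int, peak_fps: int, refresh_hz: int,
                         min_idle_fps: int = 60) -> List[str]:
    """Flag common Idle FPS / Peak FPS pitfalls.

    `min_idle_fps` is a hypothetical comfort threshold, not an AMD value;
    pick whatever still feels smooth to you.
    """
    warnings = []
    if idle_fps > peak_fps:
        warnings.append("Idle FPS is higher than Peak FPS")
    if idle_fps < min_idle_fps:
        warnings.append(f"Idle FPS below {min_idle_fps} may hurt smoothness")
    if peak_fps > refresh_hz:
        warnings.append(f"Peak FPS exceeds the {refresh_hz}Hz refresh rate")
    return warnings


if __name__ == "__main__":
    print(check_chill_settings(idle_fps=75, peak_fps=238, refresh_hz=240))  # prints []
    print(check_chill_settings(idle_fps=30, peak_fps=300, refresh_hz=240))
```

The first call mirrors the author’s Peak FPS of 238 on a 240Hz monitor and passes cleanly; the second trips both the smoothness and refresh-rate warnings.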

